NVIDIA GTC 2025 Keynote Recap: GeForce 5090, AI Factories, Robotics & More

NVIDIA GTC 2025 showcased groundbreaking AI and GPU innovations, including the GeForce RTX 5090, Grace Blackwell supercomputing, AI-powered robotics, self-driving advancements, and the NVIDIA Dynamo inference framework. Get the full recap of Jensen Huang’s keynote and what these developments mean for the future of AI, gaming, and automation.

NVIDIA GTC 2025 Key Announcements

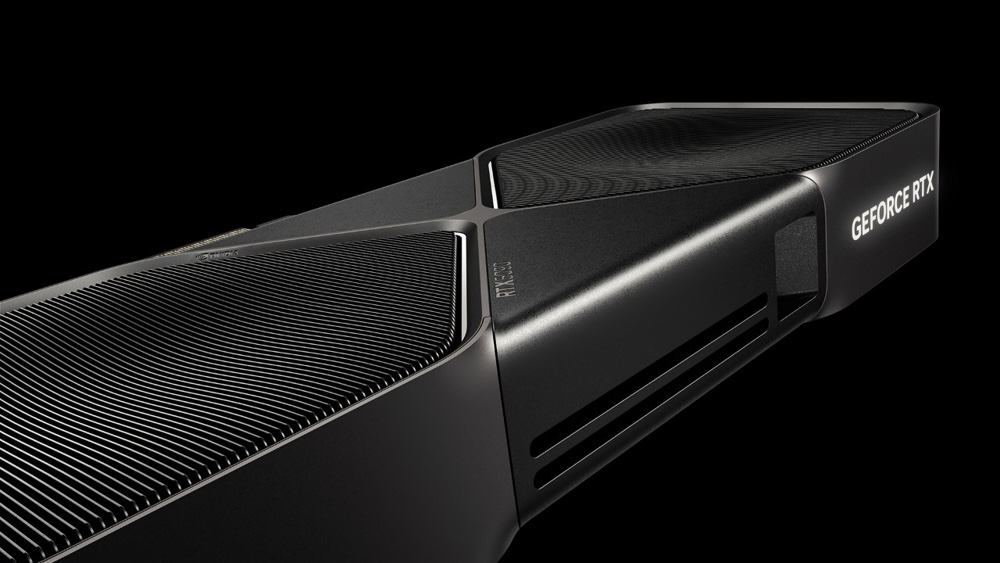

GeForce RTX 5090 – Next-Gen GPU Breakthroughs

Under the hood, the RTX 5090 boasts 21,760 CUDA cores and next-gen ray tracing and tensor cores, delivering roughly 30% higher raster graphics performance than the RTX 4090 in early tests. Its switch to faster GDDR7 memory nearly doubles memory bandwidth (1.8 TB/s vs ~1.0 TB/s on the 4090) for better handling of high-resolution textures and complex scenes. The RTX 5090 introduces DLSS 4 with multi-frame generation, enabling big leaps in frame rates by using AI to create additional frames. Altogether, these architectural improvements give creators and gamers “unprecedented AI horsepower” and the ability to game with full ray tracing at lower latency. In short, the GeForce RTX 5090 pushes the cutting edge in GPU performance while improving power and cooling efficiency – a rare generational feat.
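
The bandwidth figures quoted above follow from simple arithmetic on bus width and per-pin data rate. The sketch below reproduces them; the bus widths and data rates (512-bit at 28 Gbit/s for the RTX 5090, 384-bit at 21 Gbit/s for the RTX 4090) are assumptions taken from the cards' published specifications, not from the keynote itself.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus pins times per-pin Gbit/s, divided by 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed published specs (not stated in the article text).
rtx_5090 = memory_bandwidth_gbs(512, 28.0)  # GDDR7
rtx_4090 = memory_bandwidth_gbs(384, 21.0)  # GDDR6X
ratio = rtx_5090 / rtx_4090

print(f"RTX 5090: {rtx_5090:.0f} GB/s, RTX 4090: {rtx_4090:.0f} GB/s, ratio {ratio:.2f}x")
```

Under these assumptions the ratio comes out at about 1.78×, which is where the "nearly doubles" description comes from.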

General Motors and NVIDIA Partnership – AI in Vehicles and Manufacturing

NVIDIA announced a broad collaboration with General Motors (GM) to infuse AI across the automaker’s operations – from autonomous and assisted driving to factory automation. Under this partnership, GM will use NVIDIA’s accelerated computing and software platforms to develop next-generation smart vehicles, AI-driven factories, and even robotic systems. For example, GM plans to leverage the NVIDIA DRIVE AGX in-car computer (based on the latest Blackwell GPU architecture) as the brain of its future vehicles, capable of up to 1,000 trillion operations per second to power advanced driver-assistance and in-cabin AI features. This should enable more intelligent and safer self-driving capabilities and personalised in-car experiences.

On the manufacturing side, GM is tapping into NVIDIA Omniverse and Isaac simulation tools to create digital twins of assembly lines and train AI models for factory robotics. By virtually testing production scenarios and AI-powered robots (for tasks like material handling and precision welding), GM can optimise its factories for efficiency and safety before implementing changes in the real world. The partnership’s scope even extends to enterprise AI solutions, as GM looks to accelerate vehicle design and engineering with generative AI and simulation. Overall, this GM–NVIDIA alliance exemplifies how AI and simulation can transform the auto industry: smarter vehicles, more efficient manufacturing, and innovative consumer experiences, all driven by cutting-edge NVIDIA platforms. The impact could be industry-wide, setting new benchmarks for AI use in transportation and production.

NVIDIA Halos – Safeguarding Every Line of Code for Autonomous Driving

NVIDIA introduced NVIDIA Halos, a comprehensive safety framework for autonomous vehicles that treats safety as an end-to-end mission – “from the cloud to the car”. Halos unifies NVIDIA’s hardware and software safety technologies with its latest AI research to ensure that every component of an AV system meets rigorous safety standards. It spans everything from safety-focused system-on-chips and DriveOS (an ASIL-certified automotive operating system) to simulation tools and validation services, all working in concert. Halos defines safety on multiple levels: platform safety (with hundreds of built-in hardware safety mechanisms), algorithmic safety (validation of AI models and perception algorithms), and ecosystem safety (data sets, monitoring, and compliance). At the development stage, it provides guardrails during design, deployment, and validation to catch issues early. In practice, this means an automaker can pick and choose NVIDIA’s state-of-the-art chips, software modules, and testing tools via Halos to build their AV stack with confidence in its safety.

Notably, NVIDIA is pushing an industry-first approach in its autonomous driving systems: as CEO Jensen Huang emphasised, NVIDIA is likely “the first company in the world… to have every line of code safety assessed”. This reflects an unprecedented level of scrutiny – independent experts reviewing all software that goes into self-driving cars, which could set a new bar for functional safety in the field. By combining robust engineering practices with AI-driven validation (through simulation in NVIDIA Omniverse and real-world data checks), Halos can potentially accelerate the path to safer self-driving vehicles. It gives developers an “inspection lab” to verify their AI models and sensors in a controlled environment before they hit the road. In summary, NVIDIA Halos is about making autonomous driving as safe as possible, providing a framework where safety is baked into every layer of the AV development process – from silicon to software to AI training and testing.

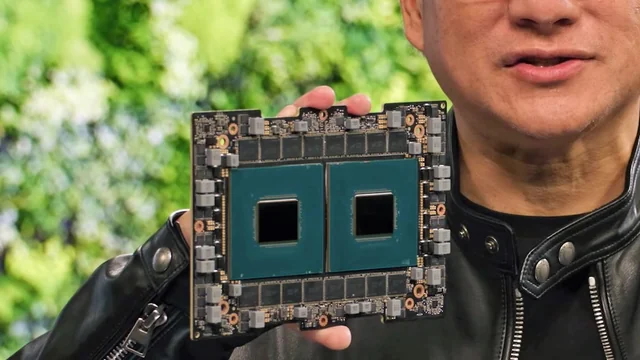

Data Center Advancements – Grace Blackwell Superchips and Exaflop AI Racks

NVIDIA’s GTC announcements highlighted major leaps in data center AI infrastructure with the Grace Blackwell platform now in full production. The Blackwell-generation GPUs, combined with NVIDIA’s Grace CPU, are enabling servers and clusters of unprecedented scale and speed. Jensen Huang revealed that NVIDIA has effectively built a “1 exaflops computer in one rack” by disaggregating NVLink (the GPU interconnect) and moving to advanced liquid cooling. In the new architecture, multiple Grace-Blackwell “superchips” are linked via fifth-generation NVLink Switch fabric, so that 72 Blackwell GPUs plus 36 Grace CPUs can operate as a single giant GPU in a rack. This 72-GPU pool, called GB200 NVL72, delivers on the order of 1.4 exaflops of AI computing (at FP4 precision) and packs 13.4 TB of HBM3e memory with 576 TB/s of memory bandwidth in one rack. Such a system achieves 30× faster LLM inference throughput than the same number of previous-gen GPUs, while reducing energy and cost per workload dramatically.

Key to this is the integration of NVLink with InfiniBand networking, which allows scaling these supercomputers beyond a single rack. The NVLink Switch system inside the rack provides an astonishing 130 TB/s of low-latency GPU-to-GPU bandwidth for internal communication. For multi-rack or cloud scaling, NVIDIA’s Quantum InfiniBand links and BlueField-3 DPUs connect these NVLink domains together, effectively blending intra-node and inter-node networking. In simpler terms, NVIDIA has blurred the line between a server and a data center – a cluster of GPUs can behave like one monster GPU for AI. With these Grace-Blackwell HGX platforms, data centers can tackle trillion-parameter AI models in real time, something previously impossible. Each year going forward will see new hardware like this, including an upcoming Blackwell Ultra platform later in the year to further boost training and “reasoning” inference performance. Overall, NVIDIA’s data center strategy – from photonics to unified memory to disaggregated NVLink – is driving AI infrastructure into the exascale era, enabling more complex AI workloads with lower energy use and better efficiency than ever before.
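
To put the rack-level numbers in perspective, it can help to divide them by the 72 GPUs in the rack. The sketch below does only that division on the figures quoted above; the variable names are illustrative, and the per-GPU values are derived estimates rather than official specifications.

```python
GPUS_PER_RACK = 72

# Rack-level figures as quoted in the text.
rack = {
    "hbm3e_tb": 13.4,
    "hbm_bandwidth_tbs": 576.0,
    "nvlink_bandwidth_tbs": 130.0,
    "ai_exaflops": 1.4,
}

# Even split across GPUs; units are converted where that reads more naturally.
per_gpu = {
    "hbm3e_gb": rack["hbm3e_tb"] * 1000 / GPUS_PER_RACK,
    "hbm_bandwidth_tbs": rack["hbm_bandwidth_tbs"] / GPUS_PER_RACK,
    "nvlink_bandwidth_tbs": rack["nvlink_bandwidth_tbs"] / GPUS_PER_RACK,
    "ai_petaflops": rack["ai_exaflops"] * 1000 / GPUS_PER_RACK,
}

for name, value in per_gpu.items():
    print(f"{name}: {value:.1f}")
```

Each GPU therefore gets roughly 186 GB of HBM3e, 8 TB/s of memory bandwidth, 1.8 TB/s of NVLink bandwidth, and a little under 20 petaflops of the quoted AI compute.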

NVIDIA Dynamo – The “AI Factory” Operating System

NVIDIA introduced NVIDIA Dynamo, an open-source platform described as “the operating system of an AI factory”. Dynamo isn’t an OS in the traditional sense, but rather a modular inference software framework for deploying and scaling AI models (especially reasoning and generative models) across vast computing clusters. Its goal is to make serving large AI models fast, efficient, and easy to scale – much like an OS manages hardware for applications, Dynamo manages GPU resources for AI workloads. It coordinates dynamic scheduling of tasks, intelligent request routing, memory optimisation, and high-throughput data handling across potentially thousands of GPUs. In NVIDIA’s testing, Dynamo could boost the throughput of a massive 72-GPU cluster by up to 30× for serving AI requests, maximising the “tokens per second” that generative AI services can output. This means AI services (like LLM-based assistants) can handle many more user queries at lower cost by using hardware more efficiently.

Crucially, Dynamo is open-source and backend-agnostic. It supports major inference backends, including TensorRT-LLM, vLLM, SGLang, and PyTorch, so developers aren’t locked in. It also includes large-language-model specific optimisations, such as disaggregated inference serving. For example, Dynamo can split an LLM’s inference workload into two phases (prefill and decode) and run them on different GPU sets optimised for each. This kind of parallelism and specialisation yields higher throughput than running the entire model sequentially on one set of GPUs. In summary, NVIDIA Dynamo gives “AI factories” (large-scale AI datacenters) a powerful toolkit to deploy AI models with cloud-like scalability and reliability. By open-sourcing it, NVIDIA is inviting the industry to adopt and improve this “inference OS,” potentially making large AI models more accessible and affordable to run for enterprises. The advantage over ad-hoc solutions is that Dynamo is purpose-built for AI at scale – akin to going from hand-crafted machine code to a full-fledged operating system that can schedule and optimise workloads automatically.
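
The prefill/decode split can be illustrated with a toy example. The sketch below is not Dynamo's API: every class and function name is hypothetical, the "model" is a trivial arithmetic stand-in, and the worker pools are plain Python objects rather than GPUs. It only shows the shape of the idea, where one pool processes the prompt once and hands its cached state to a separate pool that generates tokens.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    prompt: list[int]
    max_new_tokens: int
    kv_cache: list[int] = field(default_factory=list)  # stand-in for attention state
    output: list[int] = field(default_factory=list)


class PrefillWorker:
    """Hypothetical worker: processes the whole prompt in a single pass."""

    def __init__(self) -> None:
        self.handled = 0

    def run(self, req: Request) -> Request:
        req.kv_cache = list(req.prompt)
        self.handled += 1
        return req


class DecodeWorker:
    """Hypothetical worker: generates tokens one at a time from the cached state."""

    def __init__(self) -> None:
        self.handled = 0

    def run(self, req: Request) -> Request:
        for _ in range(req.max_new_tokens):
            # Toy "model": next token depends only on the cache contents.
            next_token = (sum(req.kv_cache) + len(req.kv_cache)) % 100
            req.kv_cache.append(next_token)
            req.output.append(next_token)
        self.handled += 1
        return req


def serve(requests, prefill_pool, decode_pool):
    """Round-robin each request through a prefill worker, then a decode worker."""
    finished = []
    for i, req in enumerate(requests):
        prefill = prefill_pool[i % len(prefill_pool)]
        decode = decode_pool[i % len(decode_pool)]
        finished.append(decode.run(prefill.run(req)))
    return finished


prefill_pool = [PrefillWorker()]
decode_pool = [DecodeWorker(), DecodeWorker()]
requests = [Request([1, 2, 3], 2), Request([4, 5], 3), Request([7], 1)]
results = serve(requests, prefill_pool, decode_pool)
print([r.output for r in results])
```

Because the two pools are separate, they can be sized independently: here one prefill worker feeds two decode workers, which is the kind of tuning that disaggregated serving makes possible.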

DGX Station AI Workstation – Personal AI Supercomputer

NVIDIA is also bringing AI supercomputing power to the desk of every researcher and engineer with its new DGX personal AI computers: DGX Spark (revealed as Project “DIGITS” at CES) and the larger DGX Station. DGX Spark is a personal AI supercomputer powered by the NVIDIA Grace–Blackwell “GB10” superchip, offering server-class performance in a quiet, plug-and-play desktop box. Despite its compact size, each unit packs one Grace CPU paired with one Blackwell GPU on the same module, delivering about 1 petaFLOP of AI performance (at FP4 precision) – on par with a small data center node. In practical terms, that’s enough muscle to prototype, fine-tune, or run AI models with up to ~200 billion parameters on your desk. The system includes 128GB of unified memory (shared between the CPU and GPU) for handling large models, and NVMe SSD storage for datasets. Impressively, it runs on a standard wall power outlet and uses advanced cooling so that any lab or office can deploy it without special infrastructure. The DGX Station is the tower-sized sibling, built on a more powerful Grace Blackwell Ultra desktop superchip with considerably more memory.
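
A quick back-of-the-envelope check shows why the ~200 billion parameter figure goes hand in hand with FP4 precision and 128GB of memory. The sketch below counts model weights only; activations, KV cache, and runtime overhead are deliberately ignored, so treat it as a lower bound rather than a sizing guide.

```python
UNIFIED_MEMORY_GB = 128  # figure quoted in the text


def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * bits_per_param / 8 / 1e9


for label, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]:
    size = weights_gb(200, bits)
    verdict = "fits" if size <= UNIFIED_MEMORY_GB else "does not fit"
    print(f"200B parameters at {label}: {size:.0f} GB, {verdict} in {UNIFIED_MEMORY_GB} GB")
```

Only the 4-bit case (100 GB of weights) fits, which is consistent with the performance figure being quoted at FP4.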

The DGX Station is aimed at letting developers prototype, fine-tune, and experiment with AI models locally before scaling up to cloud or data center clusters. NVIDIA envisions a seamless workflow: you develop and test your model on the DGX Station under your desk, then when it’s ready for larger training or deployment, you can transition to NVIDIA’s larger systems or DGX Cloud – since it’s the same architecture and software stack. By having this AI horsepower on hand, data scientists and students can iterate faster and without needing constant cloud access. Jensen Huang noted that “with Project DIGITS, the Grace Blackwell superchip comes to millions of developers”, essentially democratising access to AI computing. The DGX Station (and its sibling, DGX Spark, a similar personal AI system) will be made available through NVIDIA’s OEM partners like HP, Dell, Lenovo and others. In summary, DGX Station is bringing supercomputer-class AI capabilities to the personal workstation level, empowering researchers to engage with cutting-edge AI models directly and accelerating innovation on a broad scale.

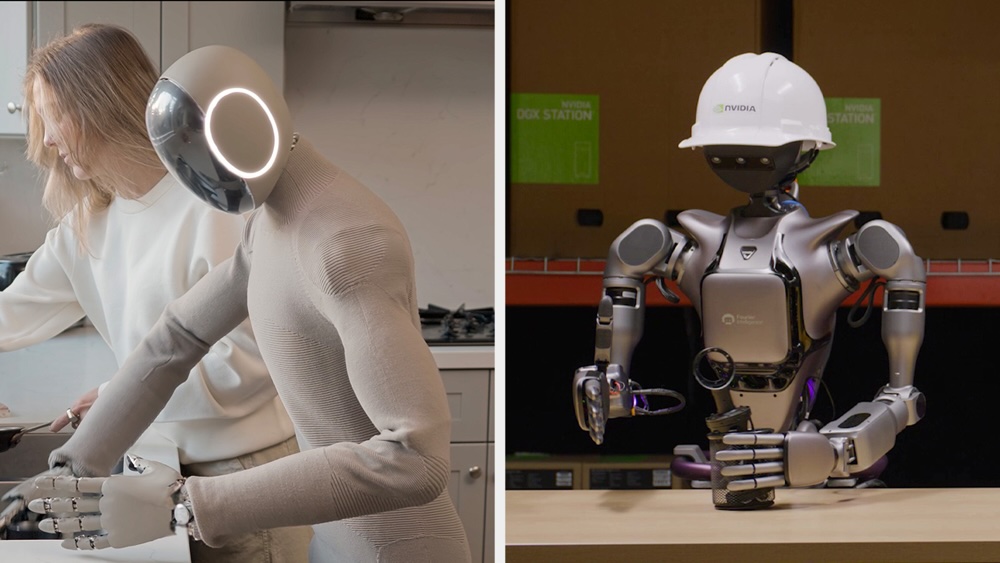

NVIDIA Robotics – Tackling a 50M Worker Shortfall with AI Robots

Physical robots and automation took center stage at GTC as NVIDIA outlined its vision to address an impending global labor shortage with advanced AI robotics. Jensen Huang cited that by the end of this decade the world could be “at least 50 million workers short” across industries. This gap – in manufacturing, logistics, healthcare, and other sectors – is driving what he calls the next $10 trillion opportunity in robotics. To meet this challenge, NVIDIA announced a portfolio of “physical AI” solutions aimed at making robots more general-purpose, safer, and easier to develop. A cornerstone is the new Isaac AMR platform (and robotics software stack) that helps companies deploy autonomous mobile robots and manipulators to automate warehouse operations and factory workflows. But the most groundbreaking release is NVIDIA’s Isaac GR00T N1 model – the first AI foundation model for humanoid robots, which we’ll detail shortly. Alongside that, NVIDIA introduced simulation tools like an Omniverse-based Synthetic Data Generation Blueprint and a physics engine called “Newton” to boost robot training (in simulation) and skills transfer to the real world.

The combined aim of these technologies is to enable a new generation of intelligent robots that can adapt to many tasks and thus help fill labor shortages. By using AI brains like GR00T N1, a single robot could potentially learn multiple skills – from moving inventory and packing goods to inspecting products – rather than being built for just one repetitive task. Companies could retrain or reprogram robots for new jobs as needed, much like hiring flexible workers. NVIDIA’s CEO framed it as “the age of generalist robotics is here”, comparing it to the rise of generalist AI in language models. If successful, these general-purpose robots could significantly augment the human workforce, taking on physically taxing or mundane jobs and boosting productivity in critical sectors. The societal impact could be profound: mitigating worker shortages, driving economic growth, and even improving safety (by letting robots handle dangerous tasks). NVIDIA’s investment in robotics – from chips (Jetson edge AI modules) to simulation software (Isaac Sim) – underscores its belief that AI-powered robots will be an integral part of the future workforce, much as PCs and the internet are today.

Newton – Open-Source Physics Engine by NVIDIA, DeepMind and Disney

A fascinating collaboration announced at GTC is “Newton”, a next-generation physics simulation engine being co-developed by NVIDIA, Google DeepMind, and Disney Research. Newton is an open-source physics engine purpose-built for robotics and virtual worlds. Its mission is to provide ultra-realistic, high-performance physics simulation to help train robots and AI models that interact with the physical environment. Today’s robots often learn using simulators (like MuJoCo or Isaac Gym), and the more accurate the physics, the better the robot’s skills transfer to the real world. Newton is expected to take this to new heights by incorporating cutting-edge physics algorithms (Disney and DeepMind both have expertise here) and by leveraging GPU acceleration from NVIDIA. This could include everything from simulating the dynamics of robotic arms and grippers with high fidelity, to modeling contact forces, friction, and fluid dynamics in virtual environments.

The collaboration is noteworthy: DeepMind’s involvement suggests an interest in improving AI training simulations (it acquired the MuJoCo physics engine in 2021 and open-sourced it in 2022, which could inform Newton), and Disney Research brings expertise in realistic animation physics and robotics (for entertainment robots). NVIDIA’s role is providing the simulation platform (Omniverse) and ensuring Newton runs efficiently on GPUs for massive parallelism. The goal of Newton is to dramatically speed up and improve robot development – developers will be able to drop their robot models into a Newton-powered simulator and get extremely realistic motion and interaction, which in turn means an AI model trained in sim will perform better in the real world. Newton is set to be open-source and is expected to become available later in 2025. Its applications go beyond humanoid robots; any field that needs physics simulation (drones, autonomous vehicles, virtual reality, games) could benefit from a state-of-the-art engine. In essence, Newton aims to be the physics backbone for the coming wave of AI and robotic systems, enabling them to learn and be tested in rich virtual worlds before they tackle our real one.
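
For readers unfamiliar with what a physics engine actually computes, the sketch below shows the core loop in miniature: advance velocity and position by a small time step, then resolve contact with the ground. It has nothing to do with Newton's real interface, which the article does not describe; the names, the single-axis setup, and the restitution value are all illustrative assumptions. Stepping several bodies in one call mirrors, very loosely, how simulators run many environments in parallel.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, acting along the vertical axis


@dataclass
class Body:
    height: float    # metres above the ground plane
    velocity: float  # m/s, positive is upward


def step(body: Body, dt: float, restitution: float = 0.5) -> Body:
    """One semi-implicit Euler step with a simple ground contact."""
    velocity = body.velocity + GRAVITY * dt
    height = body.height + velocity * dt
    if height < 0.0:
        # Contact: clamp to the ground and reflect the velocity with energy loss.
        height = 0.0
        velocity = -velocity * restitution
    return Body(height, velocity)


def simulate(bodies: list[Body], dt: float = 0.001, steps: int = 2000) -> list[Body]:
    """Advance every body by the same number of fixed time steps."""
    for _ in range(steps):
        bodies = [step(b, dt) for b in bodies]
    return bodies


final = simulate([Body(0.5, 0.0), Body(1.0, 0.0)])
for body in final:
    print(f"height={body.height:.4f} m, velocity={body.velocity:.4f} m/s")
```

A production engine replaces each of these pieces with something far more sophisticated (articulated bodies, friction, stable contact solvers), and the fidelity of those pieces is what determines how well skills learned in simulation carry over to hardware.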

NVIDIA Isaac GR00T N1 – Open-Source Humanoid Robotics Model

Perhaps the most exciting robotics announcement is Isaac GR00T N1, described as the world’s first open, general-purpose foundation model for humanoid robots. In simple terms, GR00T N1 is an AI brain that a robot can use to understand commands and figure out how to perform a wide variety of physical tasks. Much like GPT-style models are trained on mountains of text to become fluent in language, GR00T N1 has been trained on an enormous dataset of robot actions (including countless simulated demonstrations) to develop a broad understanding of manipulation and movement. It features a two-part AI architecture loosely inspired by human cognition: “System 2” is a slow, deliberative reasoning module (a vision-language model) that observes the environment and plans complex actions, while “System 1” is a fast execution module that takes those plans and controls the robot’s movements in real time (mimicking human reflexes or intuition). This combo allows GR00T N1 to break down high-level instructions into precise, coordinated motions. For example, if asked to “pick up the screwdriver and hand it to me”, the System 2 part will reason about the request and spatial scene, and System 1 will handle the fine motor control to reach out, grasp the screwdriver, and pass it over safely.
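
The division of labour between the two systems can be sketched as a loop running at two rates: a slow planner that is consulted occasionally and a fast controller that acts on every tick. The code below is a toy under stated assumptions, not GR00T N1's implementation: the function names, the one-dimensional "gripper position", the keyword-matching planner, and the replanning interval are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    target: float  # desired gripper position along one axis, in metres


def system2_plan(instruction: str, scene: dict[str, float]) -> Plan:
    """Slow, deliberative step: pick the object named in the instruction."""
    for name, position in scene.items():
        if name in instruction:
            return Plan(target=position)
    raise ValueError("instruction mentions no known object")


def system1_act(position: float, plan: Plan, gain: float = 0.2) -> float:
    """Fast step: move a fixed fraction of the remaining distance to the target."""
    return position + gain * (plan.target - position)


def run(instruction: str, scene: dict[str, float],
        start: float = 0.0, ticks: int = 60, replan_every: int = 10):
    """Run the fast controller every tick and the slow planner every few ticks."""
    position = start
    plan = None
    plans_made = 0
    for tick in range(ticks):
        if tick % replan_every == 0:
            plan = system2_plan(instruction, scene)
            plans_made += 1
        position = system1_act(position, plan)
    return position, plans_made


scene = {"screwdriver": 0.5, "hammer": -0.3}
position, plans_made = run("pick up the screwdriver and hand it to me", scene)
print(f"final position {position:.3f} m after {plans_made} plans")
```

The point of the structure is that the expensive reasoning step runs only a handful of times while the cheap control step runs continuously, which keeps motion smooth even when planning is slow.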

Out of the box, GR00T N1 can handle many common tasks required in industrial and everyday settings. It’s been shown to generalise skills like grasping objects (with one or both hands), moving and placing items, using tools, and even performing multi-step procedures that require combining basic skills in sequence. For instance, it could open a cupboard, grab a first-aid kit, and carry it to a person – a multi-step operation involving navigation, perception, and manipulation. Initial use cases highlighted include factory and warehouse jobs such as material handling, packaging, and inspection duties. The fact that GR00T N1 is a foundation model means it’s not tied to one specific robot design or task; it can be fine-tuned (“post-trained”) for different robot hardware or specialised tasks by feeding it additional data. NVIDIA has open-sourced GR00T N1’s model and even released a large synthetic dataset of robot trajectories used to train it, so researchers worldwide can build on it. The model is fully customisable – developers can teach it new skills or adapt it to their custom humanoid robots without having to start from scratch.

By releasing GR00T N1 openly, NVIDIA hopes to jumpstart a “generalist robot” ecosystem, analogous to how open AI models spurred innovation in NLP. Companies could take GR00T N1 and rapidly develop robots for roles that are hard to fill today. For example, a logistics firm could fine-tune GR00T for a bipedal warehouse robot that unloads delivery trucks, or a hospital could adapt it for a nurse-assistant robot that fetches and carries supplies. Over time, as the model and its successors improve, we may see humanoid or human-safe robots capable of seamlessly working alongside people in many environments. GR00T N1 essentially gives the robotics community a baseline AI brain that can reason and act in the physical world, which is a huge leap from having to program each new robot from the ground up. It addresses the labor shortage by aiming to make robots far more capable and versatile – potentially filling millions of job gaps in aging societies and elevating productivity globally. As Jensen Huang put it, “the age of generalist robotics is here,” and GR00T N1 is a key first step in that new era.
